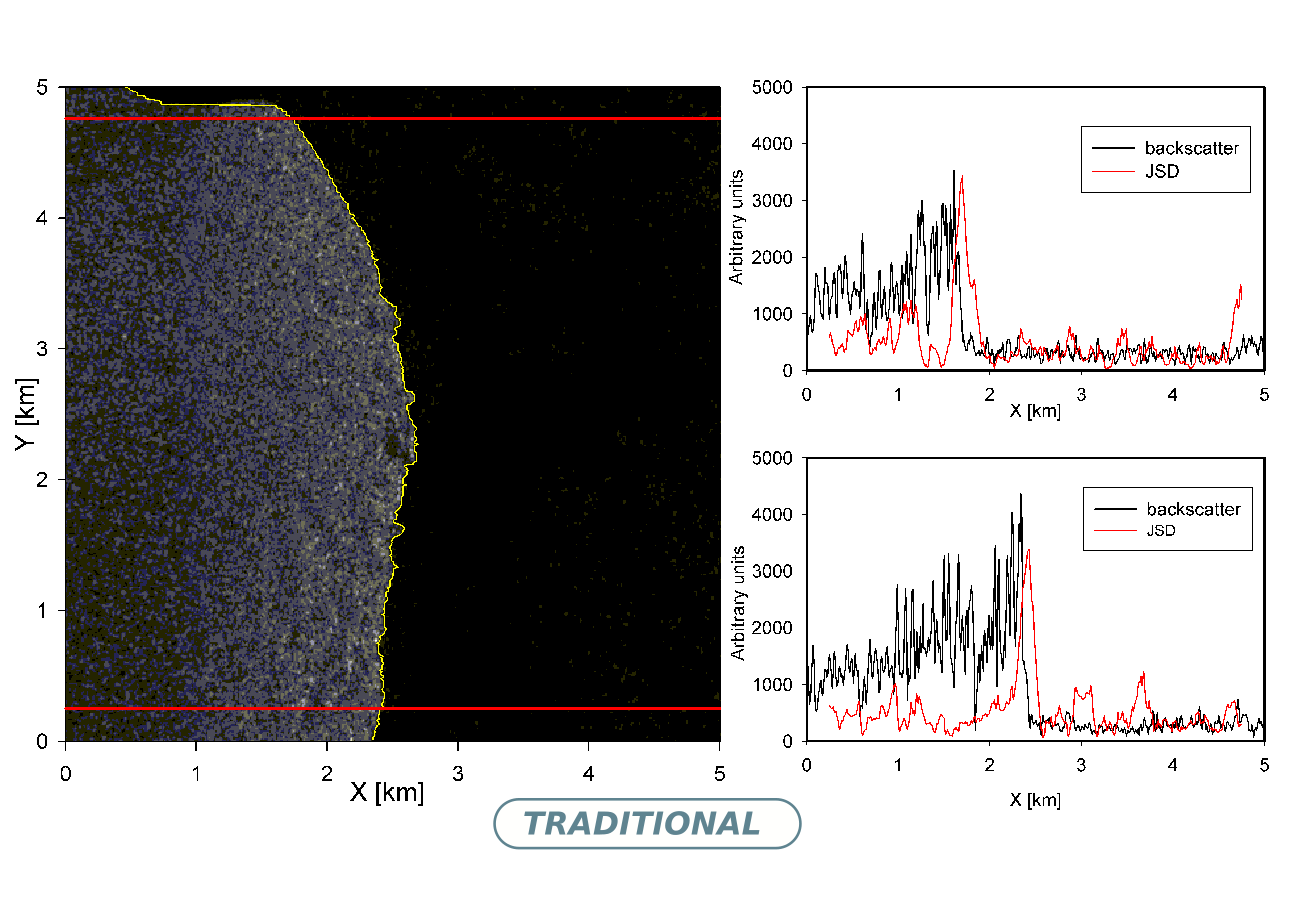

A method for continuous-range sequence analysis with Jensen-Shannon divergence

DOI: https://doi.org/10.4279/pip.130001

Keywords: entropic distance, sequence segmentation, Jensen-Shannon divergence

Abstract

Mutual information (MI) is a useful information-theoretic tool for detecting mutual dependence between data sets. Several methods have been developed for estimating MI when both data sets are discrete or when both are continuous. However, MI estimation between a discrete-range data set and a continuous-range data set has received much less attention. We therefore present a method for estimating MI in this case, based on the kernel density approximation. This calculation may be of interest in diverse contexts. Since MI is closely related to the Jensen-Shannon divergence, the method developed here is of particular interest for sequence segmentation and set comparison problems.
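The link between MI and the Jensen-Shannon divergence can be made concrete with a short sketch. For a discrete X taking values x_i with probabilities π_i and a continuous Y, I(X;Y) = H(Y) − Σ_i π_i H(Y | X = x_i), which is the Jensen-Shannon divergence of the conditional densities p(y | x_i) with weights π_i. The Python below is a minimal sketch assuming a one-dimensional Y, a Gaussian kernel with Silverman's rule-of-thumb bandwidth, and a leave-one-out resubstitution estimate of each differential entropy. These choices and all function names are illustrative assumptions, not necessarily the estimator developed in the paper.

```python
import math
import random


def silverman_bandwidth(values):
    """Silverman's rule-of-thumb bandwidth for a 1-D Gaussian kernel (assumed choice)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples per group")
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    if std == 0.0:
        raise ValueError("samples are all identical; bandwidth would be zero")
    return 1.06 * std * n ** (-1 / 5)


def kde_entropy(values):
    """Differential entropy in nats, estimated as -mean(log p_hat(y_j)),
    where p_hat is a leave-one-out Gaussian KDE evaluated at each sample."""
    n = len(values)
    h = silverman_bandwidth(values)
    log_norm = -math.log((n - 1) * h * math.sqrt(2.0 * math.pi))
    total = 0.0
    for j, yj in enumerate(values):
        # Gaussian kernel exponents for every other sample (leave-one-out).
        exps = [-0.5 * ((yj - yi) / h) ** 2 for i, yi in enumerate(values) if i != j]
        m = max(exps)
        # Log-sum-exp keeps the sum finite even for isolated points.
        total += log_norm + m + math.log(sum(math.exp(e - m) for e in exps))
    return -total / n


def mi_discrete_continuous(labels, ys):
    """I(X;Y) = H(Y) - sum_x P(x) H(Y | X = x): the Jensen-Shannon divergence
    of the class-conditional densities p(y|x) weighted by P(x)."""
    if len(labels) != len(ys):
        raise ValueError("labels and ys must have the same length")
    groups = {}
    for x, y in zip(labels, ys):
        groups.setdefault(x, []).append(y)
    n = len(ys)
    h_mixture = kde_entropy(ys)  # estimate of H(Y), the pooled (mixture) density
    h_conditional = sum(len(g) / n * kde_entropy(g) for g in groups.values())
    return h_mixture - h_conditional


if __name__ == "__main__":
    random.seed(0)
    ys = [random.gauss(0.0, 1.0) for _ in range(400)]
    independent = [random.randint(0, 1) for _ in range(400)]
    print("independent labels:", mi_discrete_continuous(independent, ys))
    labels = [0] * 200 + [1] * 200
    shifted = [random.gauss(0.0, 1.0) for _ in range(200)] + [
        random.gauss(3.0, 1.0) for _ in range(200)
    ]
    print("shifted classes:", mi_discrete_continuous(labels, shifted))
```

With labels drawn independently of Y the estimate should be close to zero, while shifting one class by three standard deviations should give a clearly positive value (for a binary X the true MI cannot exceed ln 2 nats). The pooled entropy is estimated with a wider bandwidth than the per-class entropies, so when classes are widely separated Silverman's rule can oversmooth the pooled sample and bias the estimate upward; another bandwidth selector may be preferable in that regime.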

License

Authors agree to the PIP Copyleft Notice